🚀 Future Explored: Controlling the trolls

A guide to protecting your online presence.

It’s 2025, and a video you made for your YouTube channel just went viral. You’re now one step closer to being able to quit your day job, but you’re also now “internet famous,” and the trolls are coming for you. Thankfully, there’s a way to keep them from getting in your head or, even worse, finding and spreading details about you online that could get you harmed IRL.

Online safety

By Kristin Houser

Social media has changed the world. It’s given us a new way to share bits of ourselves — or just our favorite memes — with others and helped us build and maintain the kinds of relationships that are vital to positive mental health.

It’s also enabled new forms of activism, helped people advance their careers, and even led to the creation of entirely new ones (those viral videos aren’t going to make themselves).

Unfortunately, social media can also be weaponized, with bad actors taking advantage of the platforms and the data they collect on users to harass, stalk, exploit, and harm others — both mentally and physically.

To find out what can be done to stop this, let’s look back at the history of online safety and the startup fighting to make sure you can take advantage of all the benefits of social media without having to worry about getting harassed — or worse.

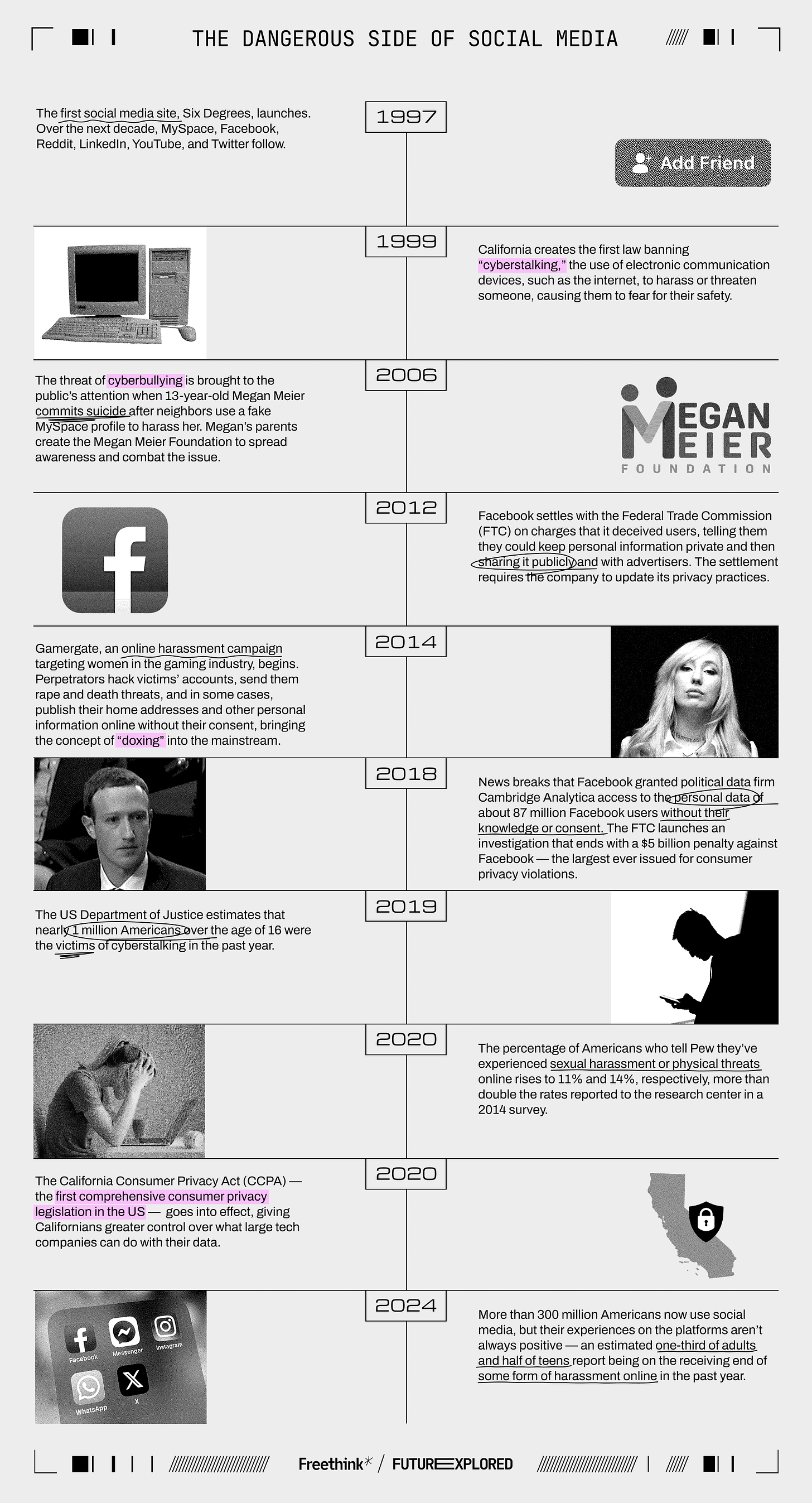

Where we’ve been

Where we’re going (maybe)

Software engineer Tracy Chou has experienced the best and the worst of social media.

In 2013, when Chou was the tech lead at Pinterest, a blog post she wrote to highlight the tech industry’s lack of transparency surrounding diversity data went viral. As she would later write, this made her an “accidental spokesperson for the diversity in tech movement.”

Over the next decade, Chou would co-found a diversity advocacy group, speak about diversity at countless conferences, and be named one of TIME’s Women of the Year. She’d also amass a large social media following, and this increased visibility online opened her up to significant abuse and harassment.

“It was a whole range, from garden variety sexism and racism to coordinated attacks that originated from Reddit and 4chan,” Chou told Freethink. “I also dealt with targeted harassment and stalking where I did have threats to my physical safety, and I had to go to law enforcement a number of times.”

Social media platforms have content moderators — people tasked with determining whether posts violate a company’s policies — but when Chou reported the online harassment to them, she was told that what users were writing to her online didn’t qualify as abusive behavior. The perpetrators were free to continue their attacks.

Despite these experiences, Chou didn’t delete her social media accounts, and she didn’t really want to. She knew that the platforms could be a force for good, even if some people used them to do harm.

“This was fallout from using platforms in a very positive way, to get a message out and build an audience and push for change,” she told Freethink, “so I have both the optimism around what is possible with technology and then also the realism around how this technology is getting used right now and the ways in which [it] can adversely impact our society.”

As a software engineer, Chou also had ideas for how tech could be used to help others enjoy the positive aspects of social media while minimizing the negative. In 2018, she founded Block Party to help her bring those ideas to life.

The startup’s first product was an app that helped people automatically filter out harassment and unwanted content on X (then Twitter), but it was forced to pivot when X began charging for access to its API in 2023.

Third-party developers need access to X’s API in order to interface with the platform and create tools that can be used on it — these can be anything from a filter, like what Block Party built, to a chatbot that automatically responds to a company’s DMs. Block Party (and many others) couldn’t afford to pay for API access, so it was forced to put its X tool on indefinite hiatus.

The startup’s new product is a browser extension that tackles online safety from another angle, helping users control who has access to their data on nine different social media platforms, including X, Facebook, and Instagram.

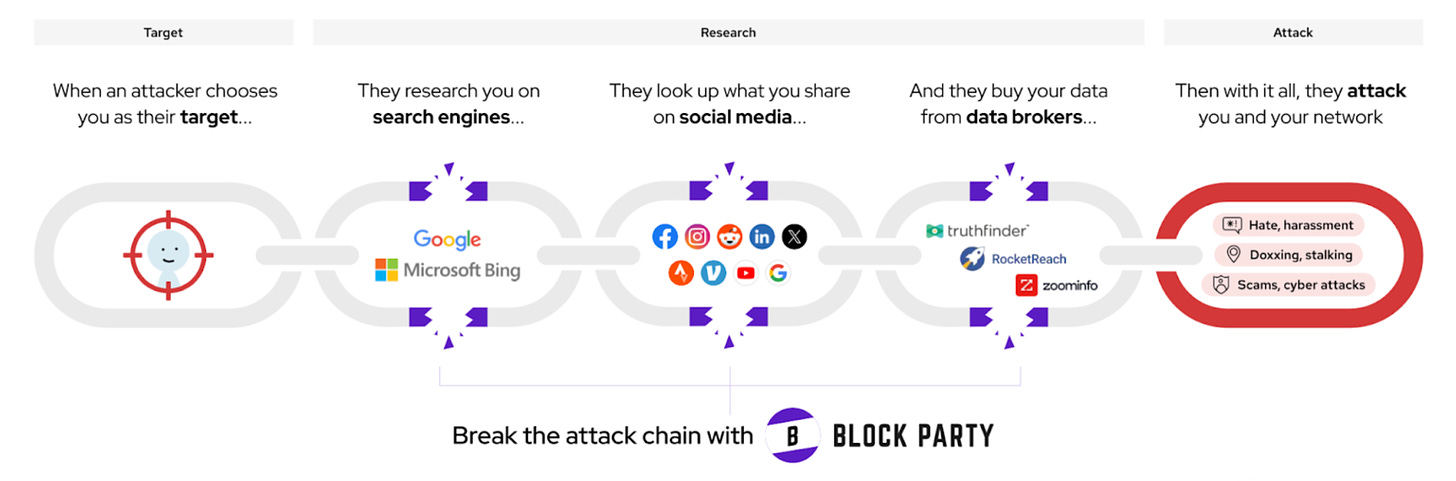

“The idea here is we're trying to help people prevent their online presence from being weaponized against them,” Chou told Freethink. “There's a lot of different ways in which this can happen. It can be from data overexposure that means you might get doxed or experience other threats to your physical safety, or it could just be other information that gets pulled out from your past to be used against you.”

While it is possible for a person to manually lock down their personal data on social media, it isn’t always easy.

Between the nine platforms that Block Party works with, there are more than 200 settings controlling who can access your data. These settings determine which of your photos are visible to the public, whether or not a platform can sell your information to data brokers (who can then sell it to others), and more.

The number and locations of these settings change regularly, and it can be in the platforms’ interest that you don’t know how to find and adjust them.

"[S]ocial media and video streaming companies harvest an enormous amount of Americans' personal data and monetize it to the tune of billions of dollars a year," said Lina Khan, chair of the Federal Trade Commission (FTC). "While lucrative for the companies, these surveillance practices can endanger people's privacy, threaten their freedoms, and expose them to a host of harms, from identity theft to stalking."

For $20 a year, Block Party will scan your accounts on the nine platforms and flag any exposure risks, such as having location information attached to your X posts. It’ll then make recommendations on how to secure your personal information and “clean up” your profiles, by bulk deleting old photos or tweets, for example.

It will also make recommendations for your notifications that can help you avoid harassment. On X, for example, Block Party might ask if you want to mute notifications from accounts that have a default profile photo, as those accounts are more likely to engage in trolling behavior, according to the startup.
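To make that kind of notification rule concrete, here is a minimal sketch in Python. It is not Block Party's code and does not call any real X API: the `Notification` type, its `has_default_avatar` field, and the `split_notifications` helper are all hypothetical names chosen for illustration, and the only rule modeled is the default-profile-photo one described above.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    # Hypothetical shape of a notification; real platforms expose different fields.
    sender: str
    has_default_avatar: bool
    text: str


def split_notifications(notifications, mute_default_avatars=True):
    """Split notifications into (shown, muted) using one opt-in rule."""
    shown, muted = [], []
    for note in notifications:
        # The rule only fires if the user has switched it on.
        if mute_default_avatars and note.has_default_avatar:
            muted.append(note)
        else:
            shown.append(note)
    return shown, muted


inbox = [
    Notification("longtime_follower", False, "Great video!"),
    Notification("user48213", True, "(unwanted reply)"),
]
shown, muted = split_notifications(inbox)
print([note.sender for note in shown])  # ['longtime_follower']
```

Muted notifications are set aside rather than deleted in this sketch, so a user could still review them later if the rule catches something they wanted to see.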

You choose the recommendations that sound right for you, and Block Party will automatically make the necessary changes to your account or give you step-by-step instructions on how to make them yourself. It keeps track of any updates to the companies’ privacy settings, too, making new recommendations as necessary.
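The scan, recommend, and apply loop described above can be sketched as a small rule-based audit. This is an illustrative Python example under stated assumptions, not Block Party's implementation: the setting names (`attach_location_to_posts`, `account_is_private`), the `Rule` type, and both helper functions are invented for the example, and account settings are modeled as plain dictionaries rather than fetched from any real platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    platform: str
    setting: str        # hypothetical setting name, not a real platform key
    safe_value: object  # the value the rule recommends
    advice: str


# Hypothetical rules; a real tool would track hundreds across platforms.
RULES = [
    Rule("x", "attach_location_to_posts", False,
         "Stop attaching location information to posts"),
    Rule("instagram", "account_is_private", True,
         "Limit who can see your photos"),
]


def audit(accounts, rules=RULES):
    """Return the rules whose recommended value differs from the current one."""
    findings = []
    for rule in rules:
        settings = accounts.get(rule.platform)
        # Skip platforms the user has not connected and settings we cannot read.
        if settings is None or rule.setting not in settings:
            continue
        if settings[rule.setting] != rule.safe_value:
            findings.append(rule)
    return findings


def apply_accepted(accounts, findings, accepted):
    """Return a copy of the settings with only the accepted changes applied."""
    updated = {platform: dict(s) for platform, s in accounts.items()}
    for rule in findings:
        if (rule.platform, rule.setting) in accepted:
            updated[rule.platform][rule.setting] = rule.safe_value
    return updated


accounts = {
    "x": {"attach_location_to_posts": True},
    "instagram": {"account_is_private": True},
}
findings = audit(accounts)
for rule in findings:
    print(f"{rule.platform}: {rule.advice}")

# The user accepts the one recommendation that was flagged.
updated = apply_accepted(accounts, findings, {("x", "attach_location_to_posts")})
```

Keeping the audit separate from the apply step mirrors the flow in the article: nothing changes until the user picks which recommendations to accept, and rerunning `audit` whenever the rule list is updated would surface new recommendations.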

Chou is far from the first person to identify the need for some way to prevent the weaponization of social media, but historically, the assumption has been that the only way things would get better is if platforms themselves did more to protect users — by improving how they moderate content, for example — or if legislators forced them to do more.

Block Party shows that “there's some space for individuals to take more control and do things that are within their power to improve their experience,” Chou told Freethink — but that doesn’t mean social media companies and governments should be let off the hook.

Passing laws that require companies larger than a certain size to have open APIs is one way legislators could help ensure tomorrow’s internet is safer and more welcoming than today’s, according to Chou. With that access, a third party could develop a tool that alerts parents if their child is being bullied on a platform, for example, or that automatically filters hate speech out of a person’s feed.

“Right now, the platforms have very much locked down the experience,” said Chou. “They are fully in control. Your Facebook feed is what Facebook wants to show you. Your Instagram feed is what Instagram wants to show you.”

We can get a glimpse at what an alternative to this status quo would look like through Bluesky, an X-like social media platform that allows users to create custom feeds or subscribe to feeds created by others.

“[Bluesky] showcases a different world that is possible when users have more choice and flexibility in building these different things,” said Chou. “Legislation can force platforms to have the sort of open infrastructure that Bluesky has.”

Open APIs aren’t without their drawbacks — it’s possible that custom feeds could make the problem of echo chambers worse, and by giving third parties access to users’ data, we may be opening ourselves up to more data harvesting or breaches.

Legislation that forces social media platforms to give users more control over their data could help mitigate the latter issue and increase online safety, too.

Currently, 20 states have consumer data privacy laws on the books and a handful of others are considering such legislation. There isn’t one federal law that provides comprehensive data protection, though, and even existing state laws could do more to protect users’ data.

“Within the US, if you put aside California, which has CCPA, people do not have very good data rights — data is being bought and sold every which way,” said Chou.

“Right now, some of the legislation means that you at least can opt out … but it's a constant battle to try to get your data out — the defaults are just so strong it's near impossible to fight back,” she continued. “It would be better if systems were forced to be opt-in.”

For legislation to be effective, though, “policymakers will need to ensure that violating the law is not more lucrative than abiding by it,” according to the FTC’s Khan, who pointed out at the DCN Next: Summit in February that companies have been known to treat fines of millions or even billions of dollars as a “cost of doing business” in the past.

Passing legislation is a slow process, though, and with a new administration taking over in Washington, it’s hard to say when or even if social media users can expect the federal government’s help in protecting themselves from harm online.

For now, Chou is focused on making Block Party the best shield she can against the negative aspects of social media, which means working to improve its existing functionality while also expanding it to include more platforms, including Amazon and ones that tend to be used by younger people, such as Discord, TikTok, and Snapchat.

“Right now, online feels like the Wild West,” Chou told Freethink. “You have no protection. You're just out there, and it's very unsafe.”

“There's a lot of this anxiety and fear around how what you're doing might get used against you or what you might encounter,” she continued. “If we are successful, we'll be able to convert it to a world where people can feel free to not worry about those things anymore.”

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at tips@freethink.com.

Kristin Houser is a staff writer at Freethink, where she covers science and tech.